Computer-Assisted Data Analysis: Benefits, Applications, and Challenges

How computers transform data analysis

Data analysis has undergone a revolutionary transformation with the advent of powerful computing systems. What once required tedious manual calculations and physical storage now happens with a few keystrokes. Computers have fundamentally changed how we approach, process, and interpret data across every industry and research field.

The most immediate benefit is speed. Calculations that would take humans days or weeks to complete manually now finish in seconds. This dramatic acceleration enables analysis of larger datasets and more complex relationships than ever before.


Automation and efficiency gains

Computers excel at repeating identical processes without fatigue or lapses in attention. This automation capability transforms data analysis in several key ways:

- Repetitive calculations execute systematically across millions of data points

- Data cleaning protocols apply uniform standards to entire datasets

- Scheduled analyses run without human intervention

- Batch processing handles multiple datasets simultaneously

These automation features free analysts from mechanical tasks and allow them to focus on interpretation and decision-making. A financial analyst who previously spent days compiling quarterly reports can now generate them almost instantly and dedicate the time saved to identifying meaningful patterns and opportunities.

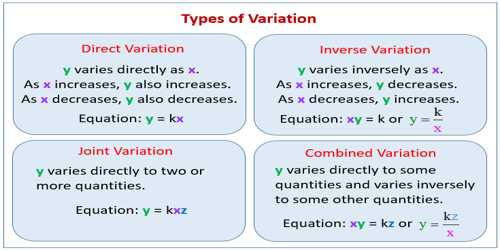

Advanced pattern recognition

Human perception has natural limits when identifying patterns across large datasets. Computers overcome these limitations through:

- Statistical analysis algorithms that detect subtle correlations

- Machine learning models that identify complex, non-linear relationships

- Clustering techniques that group similar data points automatically

- Anomaly detection systems that flag outliers and unusual patterns

These capabilities enable discoveries that would remain hidden to manual analysis. For instance, retail companies use these techniques to identify customer purchasing patterns that inform inventory decisions and marketing strategies.
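
As a rough illustration of the anomaly detection idea above, the sketch below flags values that sit far from the mean, measured in standard deviations (a z-score). The function name `flag_outliers`, the threshold, and the sales figures are all hypothetical; real systems typically use more robust methods than this.

```python
from statistics import mean, stdev


def flag_outliers(values, threshold=3.0):
    """Return (index, value) pairs whose z-score exceeds the threshold."""
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [
        (i, v) for i, v in enumerate(values)
        if abs(v - mu) / sigma > threshold
    ]


# Hypothetical daily sales with one suspicious spike.
daily_sales = [102, 98, 105, 97, 101, 99, 103, 100, 96, 104, 480, 102]
outliers = flag_outliers(daily_sales, threshold=2.5)
print(outliers)  # the spike at index 10 is the only value flagged
```

One caveat of this simple approach is that the outlier itself inflates the mean and standard deviation, so in small samples extreme values can mask one another.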

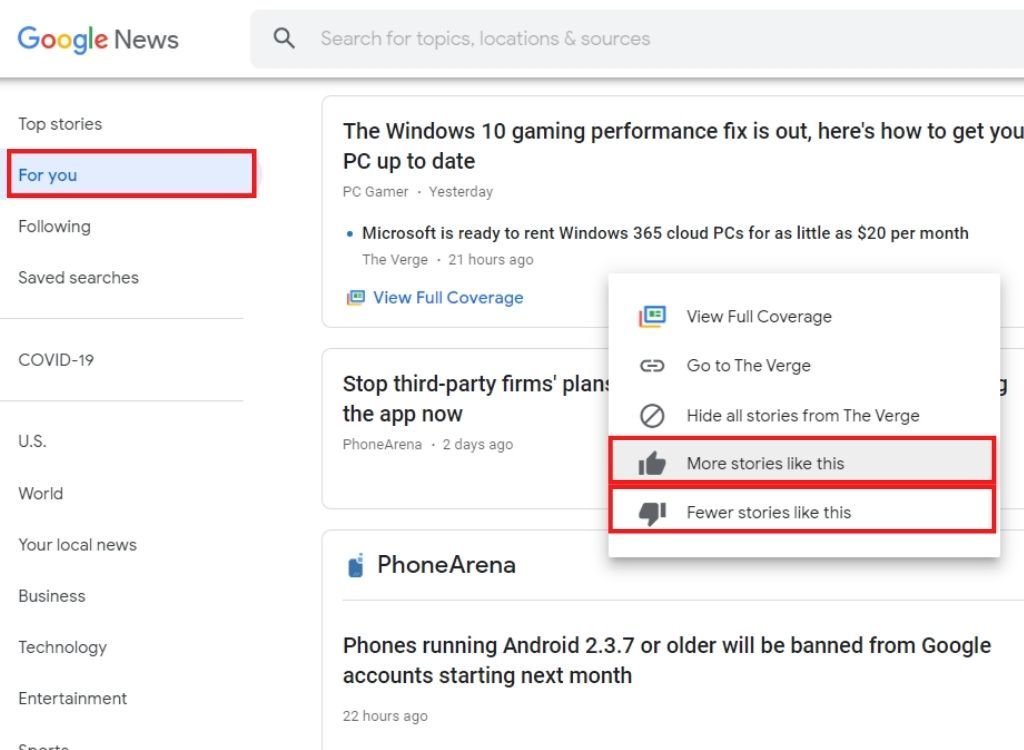

Visualization capabilities

Data visualization transforms abstract numbers into intuitive visual representations. Modern computing enables:

- Interactive dashboards that respond to user queries

- Real-time data updates reflected in visual displays

- Complex multidimensional visualizations

- Customizable charts and graphs for different audiences

These visual tools make data accessible to non-technical stakeholders and reveal insights that might be missed in numerical formats. Urban planners use visualization to understand traffic patterns, population density, and resource allocation across city maps.

Personal applications in data analysis

My journey with computer-assisted data analysis spans various projects and methodologies. Each application has revealed both the transformative power of computing and its practical limitations.

Statistical analysis software

Programs like SPSS, R, and SAS have been invaluable for analyzing research data. These platforms offer:

- Pre-built statistical tests for hypothesis validation

- Customizable analysis workflows

- Reproducible research protocols

- Advanced statistical modeling capabilities

When analyzing survey responses for market research, these tools transform thousands of individual answers into actionable insights about consumer preferences. The ability to rapidly run correlation analyses, regression models, and significance tests reveals relationships that inform product development decisions.

Spreadsheet applications

Despite their simplicity compared to dedicated statistical software, spreadsheet programs like Excel and Google Sheets offer remarkable analytical power:

- Accessible formula-based calculations

- Pivot tables for dynamic data summarization

- Conditional formatting for visual pattern identification

- Basic charting and visualization options

For budget tracking and financial analysis, spreadsheets provide immediate feedback on spending patterns and projections. The ability to rapidly sort, filter, and reorganize data makes these tools indispensable for preliminary analysis before moving to more sophisticated platforms.

Database management systems

Working with larger datasets necessitates database systems like MySQL, PostgreSQL, or MongoDB. These systems offer:

- Efficient storage of massive datasets

- Query languages for precise data extraction

- Relational structures that maintain data integrity

- Multi-user access with permission controls

When analyzing customer transaction records for a retail business, database systems make it possible to examine millions of purchase records across multiple years. SQL queries extract seasonal trends and product affinity patterns that would be impossible to identify manually.
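
To make the seasonal-trend query concrete, here is a minimal sketch that groups purchases by calendar month. It uses Python's built-in `sqlite3` module with an in-memory SQLite database as a stand-in for MySQL or PostgreSQL; the `purchases` table, its columns, and the sample rows are invented for illustration.

```python
import sqlite3

# In-memory SQLite database standing in for a production system.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE purchases (purchase_date TEXT, product TEXT, amount REAL)"
)

# Hypothetical transaction records.
rows = [
    ("2023-01-15", "gloves", 20.0),
    ("2023-01-20", "scarf", 15.0),
    ("2023-07-04", "sunscreen", 12.0),
    ("2023-07-18", "sunscreen", 12.0),
    ("2023-12-02", "gloves", 22.0),
]
conn.executemany("INSERT INTO purchases VALUES (?, ?, ?)", rows)

# Aggregate order counts and revenue per calendar month.
monthly_totals = conn.execute(
    """
    SELECT strftime('%m', purchase_date) AS month,
           COUNT(*) AS orders,
           SUM(amount) AS revenue
    FROM purchases
    GROUP BY month
    ORDER BY month
    """
).fetchall()
conn.close()

for month, orders, revenue in monthly_totals:
    print(month, orders, revenue)
```

The date function differs between database systems (`strftime` here is SQLite-specific), so the query would need adjusting for another engine.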

Programming languages

Languages like Python, R, and Julia offer unparalleled flexibility for custom analysis:

- Specialized libraries for different analytical needs (pandas, NumPy, scikit-learn)

- Custom function development for unique analytical requirements

- Integration with various data sources and formats

- Reproducible analysis through scripts and notebooks

For text analysis projects, Python’s natural language processing libraries transform unstructured customer feedback into quantifiable sentiment scores and topic clusters. This automation processes thousands of comments in minutes rather than the weeks it would take manually.

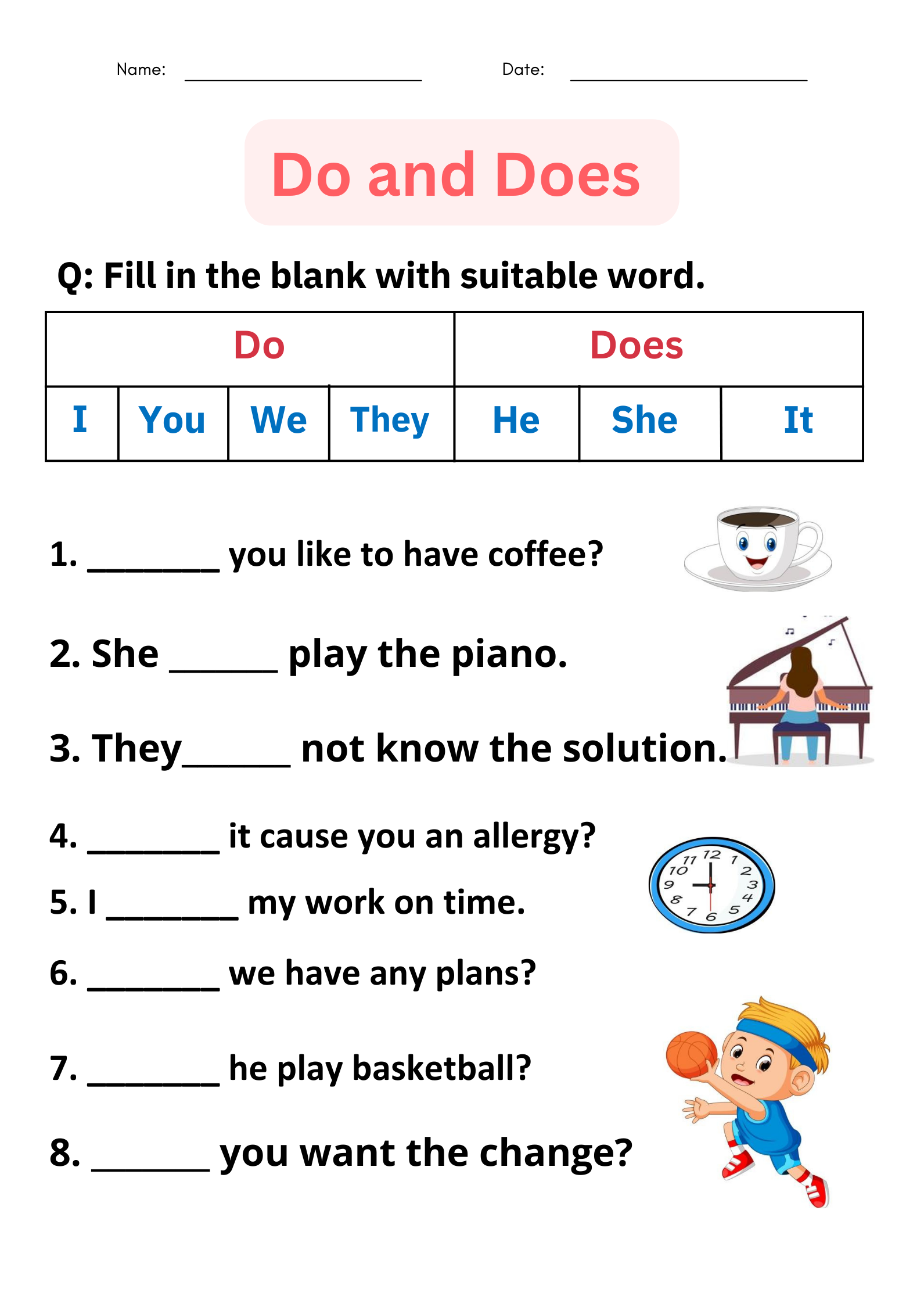

Common challenges in computer-assisted analysis

Despite their power, computers introduce specific challenges to the data analysis process. Understanding these limitations helps develop more robust analytical approaches.

Data quality and preparation issues

The adage “garbage in, garbage out” remains distressingly relevant in computational analysis:

- Missing values create calculation errors or skewed results

- Inconsistent formats require standardization before analysis

- Duplicate records distort counts and statistical measures

- Outliers disproportionately influence statistical calculations

When analyzing healthcare outcomes data, inconsistent recording practices across different facilities create significant preparation challenges. Patient demographics entered in various formats require extensive cleaning before meaningful comparisons can be made.
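
The sketch below shows one way the issues listed above (missing values, inconsistent formats, duplicates) might be handled in a small cleaning step. The field names, the accepted date formats, and the sample records are assumptions made for the example, not a description of any real healthcare dataset.

```python
from datetime import datetime

# Date formats this sketch assumes the source facilities used.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y")


def parse_date(text):
    """Try each known format; return an ISO date string or None."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean_records(records):
    """Standardize fields, drop duplicates, and set aside incomplete rows."""
    seen = set()
    cleaned, rejected = [], []
    for rec in records:
        row = {
            "patient_id": (rec.get("patient_id") or "").strip(),
            "birth_date": parse_date(rec.get("birth_date") or ""),
            # Reduce "female", "F", "f" and similar to a one-letter code.
            "sex": (rec.get("sex") or "").strip().upper()[:1] or None,
        }
        if not row["patient_id"] or row["birth_date"] is None:
            rejected.append(rec)  # keep for manual review, do not guess
            continue
        key = (row["patient_id"], row["birth_date"], row["sex"])
        if key in seen:
            continue  # duplicate after standardization
        seen.add(key)
        cleaned.append(row)
    return cleaned, rejected


raw = [
    {"patient_id": "A1", "birth_date": "1980-03-05", "sex": "female"},
    {"patient_id": " A1 ", "birth_date": "03/05/1980", "sex": "F"},
    {"patient_id": "B2", "birth_date": "17.11.1975", "sex": "m"},
    {"patient_id": "C3", "birth_date": None, "sex": "M"},
]
cleaned, rejected = clean_records(raw)
print(len(cleaned), "clean rows;", len(rejected), "rejected")
```

Rows that cannot be standardized are set aside rather than silently dropped or filled in, so a person can decide how to handle them.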

Technical learning curves

Effective data analysis tools frequently come with steep learning curves:

- Programming languages require syntax memorization

- Statistical concepts must be understood for proper interpretation

- Software platforms have unique interfaces and workflows

- Advanced techniques require specialized knowledge

Learning R for statistical analysis initially slows productivity compared to familiar spreadsheet tools. The investment in learning syntax and package management eventually pays off with more powerful analytical capabilities, but the transition period creates temporary inefficiencies.

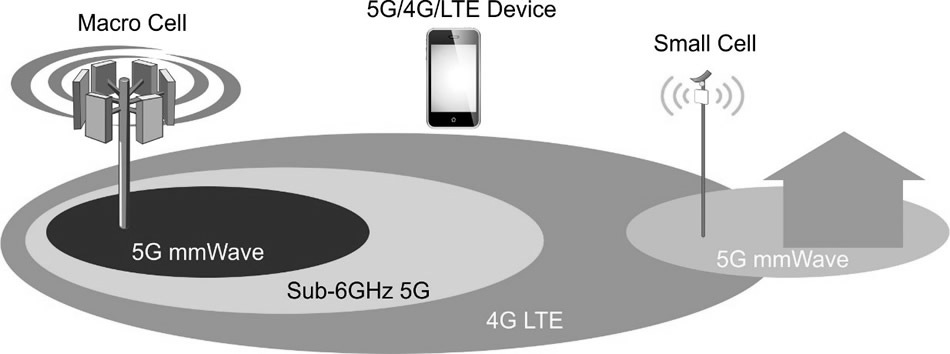

Computational limitations

Even modern computers have practical limits:

- Memory constraints restrict dataset size for in-memory processing

- Complex calculations may require prohibitive processing time

- Some algorithms scale poorly with larger datasets

- Specialized hardware may be needed for certain analyses

When analyzing high-resolution satellite imagery for environmental monitoring, standard desktop computers struggle with the file sizes and processing requirements. Solutions require cloud computing resources and specialized algorithms designed for distributed processing.

Interpretation challenges

Computers calculate precisely but lack contextual understanding:

- Statistical significance doesn’t always equal practical significance

- Correlation algorithms identify relationships without establishing causation

- Black box models produce results without transparent reasoning

- Domain expertise remains essential for meaningful interpretation

When analyzing educational assessment data, statistical models identify correlations between test scores and numerous variables. Without educational expertise to interpret these findings, it would be easy to draw misleading conclusions about which factors actually influence student performance.
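
The gap between statistical and practical significance can be shown with a small calculation. The sketch below runs a two-sided z-test on summary statistics and also reports Cohen's d as an effect size. It assumes equal group sizes and a shared, known standard deviation, and the test-score numbers are made up for the example.

```python
from math import sqrt
from statistics import NormalDist


def two_sample_z(mean_a, mean_b, sd, n_per_group):
    """Two-sided z-test for two equal-sized groups with a shared, known SD.

    Returns the z statistic, the p-value, and Cohen's d (effect size).
    """
    standard_error = sd * sqrt(2 / n_per_group)
    z = (mean_b - mean_a) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    cohens_d = (mean_b - mean_a) / sd
    return z, p_value, cohens_d


# Hypothetical: average scores of 70.0 vs 70.3 with 100,000 students each.
z, p, d = two_sample_z(mean_a=70.0, mean_b=70.3, sd=10.0, n_per_group=100_000)
print(f"z = {z:.2f}, p = {p:.2e}, Cohen's d = {d:.3f}")
```

With samples this large, a 0.3-point difference yields a p-value far below 0.05, yet the effect size is about 0.03 standard deviations, which most educators would consider negligible. Deciding whether such a difference matters is a domain judgment, not a calculation.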

Overcoming data analysis challenges

Successful computer-assisted analysis requires strategies to address common obstacles. These approaches combine technical solutions with methodological rigor.

Robust data preparation workflows

Developing systematic data preparation processes minimizes quality issues:

- Automated validation checks flag problematic values

- Standardized cleaning protocols ensure consistency

- Documentation of data transformations maintains transparency

- Version control tracks changes to datasets

Creating reusable data cleaning scripts for customer relationship management data ensures consistent preparation regardless of who performs the analysis. This approach reduces errors and saves significant time across multiple projects.

Continuous learning strategies

Addressing technical knowledge gaps requires ongoing education:

- Online courses provide structured learning paths

- Community forums offer solutions to specific problems

- Documentation and tutorials explain tool functionality

- Practice projects build practical experience

Setting aside dedicated time each week for learning new analytical techniques gradually expands capabilities. Starting with basic descriptive statistics in Python before progressing to machine learning models creates a manageable learning progression.

Scalable computing solutions

Computational limitations can be addressed through various approaches:

- Cloud computing provides on-demand resources

- Distributed processing divides work across multiple machines

- Efficient algorithms reduce computational requirements

- Sampling techniques work with representative data subsets

When local processing couldn’t handle the volume of social media data, moving to cloud-based processing solved the bottleneck. This approach allowed scaling resources based on dataset size without investing in expensive hardware.
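
Of the approaches listed above, sampling is the easiest to sketch in a few lines. The example below uses reservoir sampling (Algorithm R), one common technique for drawing a uniform random sample from data too large to hold in memory. The function name and parameters are illustrative choices, not part of any particular library.

```python
import random


def reservoir_sample(stream, k, seed=None):
    """Draw k items uniformly at random from an iterable of unknown length.

    Only k items are held in memory at any time (Algorithm R).
    """
    rng = random.Random(seed)
    reservoir = []
    for i, item in enumerate(stream):
        if i < k:
            reservoir.append(item)  # fill the reservoir first
        else:
            j = rng.randint(0, i)  # inclusive on both ends
            if j < k:
                reservoir[j] = item  # replace with probability k / (i + 1)
    return reservoir


# A generator stands in for a large file or feed read one record at a time.
records = (n for n in range(1_000_000))
sample = reservoir_sample(records, k=100, seed=42)
print(len(sample))
```

Because the stream is consumed one item at a time, memory use depends on the sample size rather than the dataset size.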

Collaborative interpretation

Combining technical and domain expertise improves interpretation:


- Cross-functional teams bring diverse perspectives

- Visualization makes technical results accessible to domain experts

- Iterative analysis incorporates feedback from stakeholders

- Multiple analytical approaches validate findings

Partnering with subject-matter experts when analyzing manufacturing process data provides crucial context for statistical findings. Their practical knowledge helps distinguish statistically significant patterns that have real operational impact from those that are mathematical artifacts.

The future of computer-assisted data analysis

Emerging technologies continue to transform data analysis capabilities and approaches. These developments promise to address current limitations while opening new analytical possibilities.

Artificial intelligence and machine learning

AI-powered analysis tools are increasingly accessible:

- Automated machine learning platforms require minimal technical expertise

- Natural language interfaces allow query-based analysis

- Predictive capabilities identify future trends and outcomes

- Computer vision analyzes image and video data

These technologies democratize advanced analysis techniques while handling increasingly complex data types. The ability to process unstructured data like text, images, and audio expands the scope of what can be analyzed computationally.

Real-time analysis capabilities

The shift from batch processing to real-time analysis changes decision-making timelines:

- Stream processing handles continuous data flow

- Edge computing analyzes data at collection points

- Instant alerts flag critical patterns as they occur

- Adaptive systems respond automatically to changing conditions

These capabilities transform reactive analysis into proactive decision-making. Systems that continuously monitor and analyze data can identify opportunities and threats as they emerge rather than in retrospective reports.
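
A minimal sketch of the stream-processing idea: the code below keeps a running mean and standard deviation using Welford's online algorithm, so each reading is processed once and then discarded, and it raises an alert when a new reading falls far from what has been seen so far. The class and function names, the threshold, the warm-up length, and the sensor values are all hypothetical.

```python
from math import sqrt


class RunningStats:
    """Running mean and sample standard deviation (Welford's algorithm)."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # sum of squared deviations from the current mean

    def update(self, value):
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (value - self.mean)

    @property
    def stdev(self):
        return sqrt(self._m2 / (self.count - 1)) if self.count > 1 else 0.0


def monitor(readings, threshold=3.0, warmup=5):
    """Yield (position, value) for readings far from the running mean."""
    stats = RunningStats()
    for position, value in enumerate(readings):
        # Compare against statistics from earlier readings only.
        if stats.count >= warmup and stats.stdev > 0:
            if abs(value - stats.mean) / stats.stdev > threshold:
                yield position, value
        stats.update(value)


# Hypothetical temperature feed with one abnormal reading.
sensor_feed = [20.1, 19.8, 20.3, 20.0, 19.9, 20.2, 35.0, 20.1]
alerts = list(monitor(sensor_feed))
print(alerts)  # only the 35.0 reading at position 6 triggers an alert
```

In this sketch the abnormal reading is still folded into the running statistics after it is flagged. A production system might exclude flagged values or use a sliding window instead.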

Democratized data tools

Analysis capabilities are becoming more accessible to non-technical users:

- No-code platforms offer drag-and-drop analysis

- Automated reporting generates insights in plain language

- Intuitive interfaces reduce technical barriers

- Embedded analytics integrate into everyday applications

These tools extend analytical capabilities throughout organizations rather than limiting them to specialized data teams. This democratization allows domain experts to explore data directly without technical intermediaries.

Conclusion

Computers have revolutionized data analysis by dramatically increasing the speed, scale, and complexity of what can be analyzed. From basic spreadsheets to advanced machine learning algorithms, these tools transform raw data into actionable insights across every field and industry.

The journey of computer-assisted analysis comes with challenges, from data quality issues to technical learning curves and computational limitations. Still, systematic approaches to data preparation, continuous learning, scalable computing solutions, and collaborative interpretation can overcome these obstacles.

As artificial intelligence, real-time processing, and democratized tools continue to evolve, the relationship between humans and computers in data analysis grows increasingly symbiotic. Computers handle mechanical tasks, pattern recognition, and processing at scales beyond human capability, while human analysts provide context, interpretation, and creative problem-solving.

This partnership between human insight and computational power represents the most effective approach to modern data analysis, combining the best of both worlds to reveal meaningful patterns in an increasingly data-rich environment.
